Published: Monday, October 18 2021 10:46

Carbon is not the shiniest element, nor the most reactive, nor the rarest. But it is one of the most versatile.

Carbon is the backbone of life on Earth and of the fossil fuels that have resulted from the demise of ancient life. Carbon is the essential ingredient for turning iron into steel, which underlies technologies from medieval swords to skyscrapers and submarines. And strong, lightweight carbon fibers are used in cars, planes and windmills. Even on its own, carbon is extraordinarily adaptable: it is the sole ingredient of diamonds, buckyballs and graphite (the stuff used to make pencil lead), among other things.

This last form, graphite, is at first glance the most mundane, but thin sheets of it host a wealth of uncommon physics. Research into single-atom-thick sheets of graphite, called graphene, took off after 2004, when scientists developed a reliable way to produce them (using everyday adhesive tape to repeatedly peel layers apart). In 2010, early experiments demonstrating the quantum richness of graphene earned two researchers the Nobel Prize in Physics.

In recent years, graphene has kept on giving. Researchers have discovered that stacking layers of graphene two or three at a time (called, respectively, bilayer graphene or trilayer graphene) and twisting the layers relative to each other opens fertile new territory for scientists to explore. Research into these stacked sheets of graphene is like the Wild West, complete with the lure of striking gold and the uncertainty of uncharted territory.

Researchers at JQI and the Condensed Matter Theory Center (CMTC) at the University of Maryland, including JQI Fellows Sankar Das Sarma and Jay Sau, among others, are busy building the theoretical foundation that will map this new landscape. And there is a lot to map: the phenomena in graphene range from the familiar, like magnetism, to the more exotic, like strange metallicity, different versions of the quantum Hall effect, and the Pomeranchuk effect, each of which involves electrons coordinating to produce unique behaviors. One of the most promising veins of scientific treasure is the appearance of superconductivity (lossless electrical flow) in stacked graphene.

“Here is a system where almost every interesting quantum phase of matter that theorists ever could imagine shows up in a single system as the twist angle, carrier density, and temperature are tuned in a single sample in a single experiment,” says Das Sarma, who is also the Director of the CMTC. “Sounds like magic or science fantasy, except it is happening every day in at least ten laboratories in the world.”

The richness and diversity of the electrical behaviors in graphene stacks have inspired a stampede of research. The 2021 American Physical Society March Meeting included 13 sessions on graphene or twisted bilayers, and in June Das Sarma hosted a day-long virtual conference for researchers to discuss twisted graphene and the related research it has inspired. Stacked graphene is extensively represented in scientific journals, and the online arXiv preprint server hosts over 2,000 articles about “bilayer graphene,” nearly 1,000 of them posted since 2018.

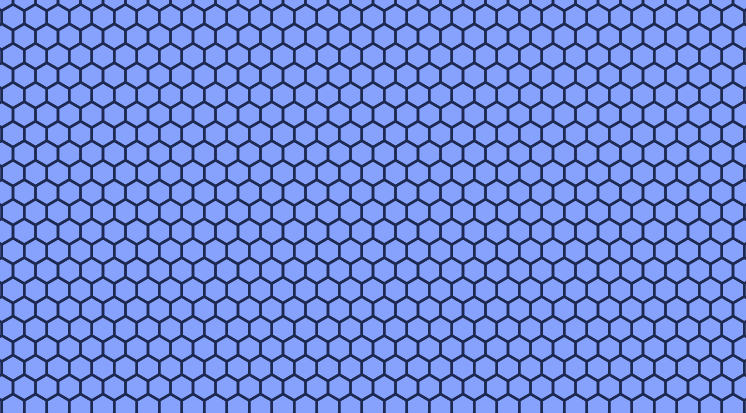

In a sheet of graphene, a carbon atom sits at the corner of each hexagon. (Credit: Paul Chaikin with modifications by Bailey Bedford)

Perhaps surprisingly, graphene’s wealth of quantum research opportunities is tied to its physical simplicity.

Graphene is a repeating honeycomb sheet with a carbon atom at every corner. The carbon atoms hold strongly to one another, so imperfections in the pattern are uncommon. Each carbon atom contributes an electron that can move freely between atoms, so the resulting sheets conduct electricity very well. Graphene is also lightweight, has a tensile strength more than 300 times greater than that of steel, and is unusually good at absorbing light. These features make it convenient to work with, and it is also easy to obtain.
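For readers who want to see the honeycomb concretely, here is a minimal Python sketch that builds a small patch of graphene and checks that an atom away from the edges has exactly three nearest neighbors. It assumes the textbook carbon-carbon distance of about 0.142 nm; the function names are illustrative and do not come from any published code.

```python
import math

BOND_NM = 0.142                 # approximate carbon-carbon distance in graphene (nm)
A_NM = math.sqrt(3) * BOND_NM   # lattice constant, about 0.246 nm


def honeycomb_sites(n):
    """Return (x, y) positions in nm for an n x n patch of the honeycomb."""
    a1 = (A_NM, 0.0)
    a2 = (A_NM / 2, A_NM * math.sqrt(3) / 2)
    # Two atoms per repeating cell: one at the cell origin, one offset by (a1 + a2) / 3.
    basis = [(0.0, 0.0), ((a1[0] + a2[0]) / 3, (a1[1] + a2[1]) / 3)]
    sites = []
    for i in range(n):
        for j in range(n):
            ox = i * a1[0] + j * a2[0]
            oy = i * a1[1] + j * a2[1]
            for bx, by in basis:
                sites.append((ox + bx, oy + by))
    return sites


def neighbor_count(sites, k, tol=1e-6):
    """Count atoms lying one bond length (within tol) from atom k."""
    xk, yk = sites[k]
    return sum(
        1
        for m, (x, y) in enumerate(sites)
        if m != k and abs(math.hypot(x - xk, y - yk) - BOND_NM) < tol
    )


n = 6
sites = honeycomb_sites(n)
center = 2 * (3 * n + 3)  # first basis atom of cell (3, 3), away from the patch edges
print(len(sites), neighbor_count(sites, center))
```

Describing the honeycomb as a triangular lattice with two atoms per repeating cell is a standard choice: it makes the repeating unit explicit, which is also a natural starting point for thinking about layers that are stacked, shifted or twisted.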

Graphene’s pure, consistent structure is an excellent embodiment of the physicist’s ideal of a two-dimensional solid. This makes it a perfect playground for studying how quantum physics plays out in a material, without the complications that the messier structure of most materials introduces. Stacking layers of graphene on top of each other then unlocks a variety of new properties: each layer can be rotated (by what scientists call a “twist angle”) or shifted relative to the hexagonal pattern of its neighbors.

Graphene’s structural and electrical properties make it easy to change the quantum landscape that electrons experience in an experiment, giving researchers several ways to customize, or tune, graphene’s electrical properties. Combining these basic building blocks has already produced a wealth of results, and researchers aren’t done experimenting.

A ‘Magical’ Flourish

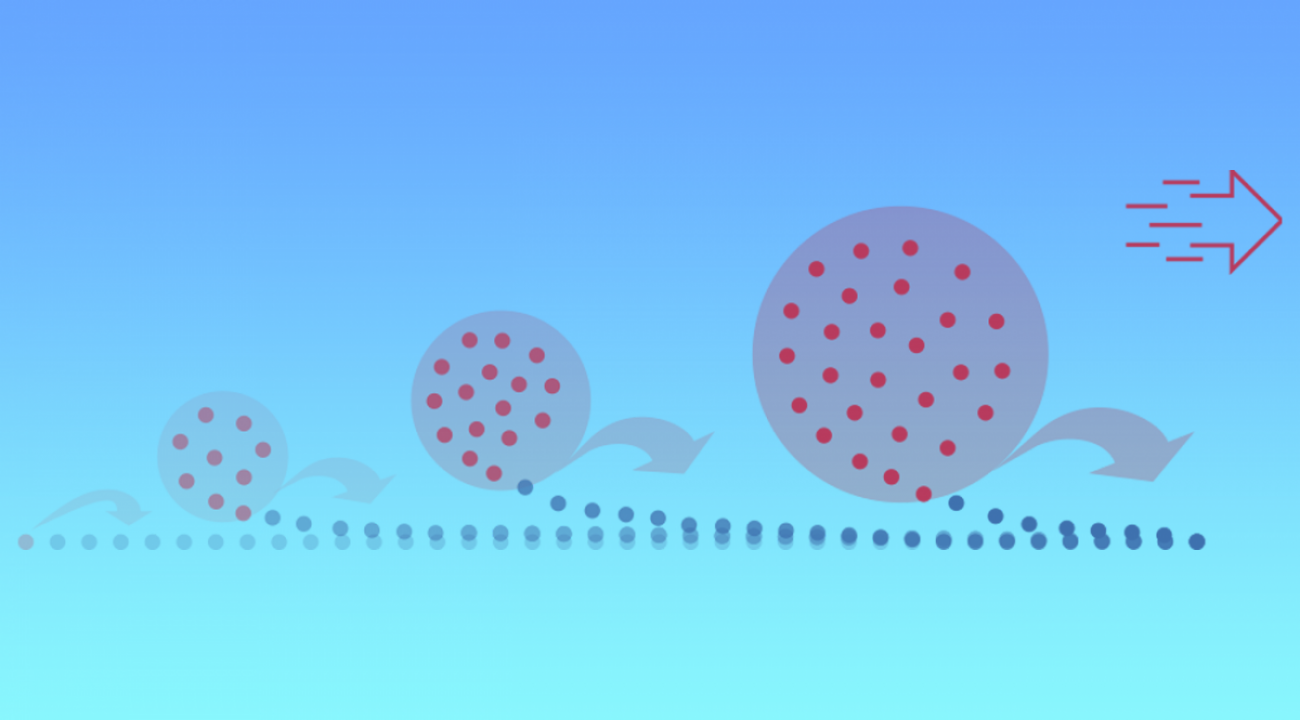

In the quantum world of electrons in graphene, the way that layers sit atop one another is important. When adjacent sheets in a bilayer are twisted with respect to each other, some atoms in the top sheet end up almost directly above their counterparts in the bottom sheet, while elsewhere atoms end up far (on an atomic scale) from any atom in the other sheet. These differences form large, repeating patterns that resemble the arrangement of atoms in a single sheet but on a much longer scale, as shown in the image at the top of the story and in the interactive visual below.

Every change of the angle also changes the scale of the larger pattern that forms the quantum landscape through which the electrons travel. The quantum environments formed by various repeating patterns (or a lack of any organization) are one of the main reasons that electrons behave differently in different materials; in particular, a material’s quantum environment dictates the interactions electrons experience. So each minuscule twist of a graphene layer opens a whole new world of electrical possibilities.
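A rough sense of how strongly the twist angle sets the size of the large-scale pattern comes from a standard geometric estimate: for two identical lattices with lattice constant a rotated by a small angle θ, the pattern repeats every a / (2 sin(θ/2)). The sketch below assumes rigid, undistorted layers and a ≈ 0.246 nm; real samples relax and are never perfectly uniform, so treat the numbers as estimates.

```python
import math

A_NM = 0.246  # graphene lattice constant in nanometers (approximate)


def moire_period_nm(twist_deg):
    """Approximate repeat length of the pattern formed by two sheets twisted by twist_deg.

    Uses the rigid-lattice geometry L = a / (2 sin(theta / 2)).
    """
    theta = math.radians(twist_deg)
    return A_NM / (2 * math.sin(theta / 2))


for angle in (5.0, 2.0, 1.1, 0.5):
    print(f"{angle:>4} deg -> {moire_period_nm(angle):6.1f} nm")
```

At about 1.1 degrees the estimate gives a period of roughly 13 nm, some fifty times the spacing of the underlying honeycomb, and the period keeps growing as the angle shrinks.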

“This twist is really a new tuning knob that was absent before the discovery of these 2D materials,” says Fengcheng Wu, who has worked on graphene research with Das Sarma as a JQI and CMTC postdoc and now collaborates with him as a professor at Wuhan University in China. “In physics, we don't have too many tuning knobs. We have temperature, pressure, magnetic field, and electric field. Now we have a new tuning knob which is a big thing. And this twist angle also provides new opportunities to study physics.”

Researchers have discovered that at a special, small twist angle of about 1.1 degrees, whimsically named the “magic angle,” the environment is just right to create strong interactions that radically change the material’s properties. At that precise angle, the electrons tend to cluster around certain areas of the graphene, and new electrical behaviors suddenly appear, as if summoned with a magician’s dramatic flourish. Magic-angle graphene behaves as a poorly conducting insulator in some circumstances, and in others it goes to the opposite extreme, becoming a superconductor: a material that transports electricity without any loss of energy.

The discovery of magic-angle graphene, along with its quantum behaviors resembling those of high-temperature superconductors, was named the Physics World 2018 Breakthrough of the Year. Superconductors have many valuable potential uses, like revolutionizing energy infrastructure and making efficient maglev trains. Finding a convenient, room-temperature superconductor has been a holy grail for scientists.

The discoveries of a promising new form of superconductivity and a plethora of other electrical oddities, all with a convenient new knob to play with, are significant developments, but for physicists the most exciting part is the many new questions they have raised. Das Sarma has investigated many aspects of layered graphene and has published more than 15 papers on the topic since 2019; he says two of the questions that interest him most are how graphene becomes superconducting and how it becomes magnetic.

“Various graphene multilayers are turning out to be a richer playground for physics than any other known condensed matter or atomic collective system—the occurrence of superconductivity, magnetism, correlated insulator, strange metal here is coupled with an underlying nontrivial topology, providing an interplay among interaction, band structure, and topology which is unique and unprecedented,” says Das Sarma. “The subject should remain in the forefront of research for a long time."

Strange Bedfellows

Scientists have known about superconductivity and magnetism for a long time, but graphene isn’t where they expected to find them. Finding both individually was a surprise, but scientists have also found the two phenomena occurring simultaneously in some experiments.

Superconductivity and magnetism are usually antagonists, so their presence together in a graphene stack suggests that something unusual is happening. Researchers like Das Sarma hope that uncovering which interactions lead to these phenomena in graphene will deepen their understanding of the underlying physics and perhaps allow them to discover more materials with exotic and useful properties.

One hint of the treasure that may be waiting to be discovered comes from measurements of twisted bilayer graphene’s electrical properties, which resemble behaviors seen in certain high-temperature superconductors. This suggests that graphene might be crucial to solving the mysteries surrounding high-temperature superconductivity.

Current clues suggest that the peculiarities of electron interactions are the key to understanding the topic. Superconductivity requires electrons to pair up, so the interactions that drive pairing in graphene stacks are naturally of interest.

In an article published in Physical Review B, Das Sarma, Wu and Euyheon Hwang, a former JQI research scientist who is now a professor at Sungkyunkwan University in South Korea, proposed that what binds pairs of electrons in twisted bilayer graphene may be surprisingly mundane. They think the pairing mechanism may be the same as that in the best-understood superconductors. But they also think that this conventional origin may produce unconventional pairs.

Fengcheng Wu (Credit: Fengcheng Wu)

Their analysis suggests that at the magic angle, it is not just the electrons’ interactions with each other that are enhanced but also their interactions with vibrations of the carbon atoms. These vibrations, called phonons, are the quantum mechanical version of sound and other vibrations in materials.

In the best-understood superconductors, it is phonons that bind electrons into pairs. In these superconductors, the partnered electrons must have opposite values of their spin, a quantum property related to how quantum particles orient themselves in a magnetic field. But the team’s theory suggests that in graphene this traditional mechanism can pair not only electrons with opposite spins but also electrons with the same spin. Their description of the pairing mechanism offers a possible explanation for superconductivity in twisted bilayer graphene and, more generally, in graphene-based materials.

“Unconventional superconductivity is highly sought after in physics, as it is exotic on its own and may also find applications in topological quantum computing,” says Wu. “Our theory provides a conventional mechanism towards unconventional superconductivity.”

More recently, Das Sarma, Sau, Wu and Yang-Zhi Chou, a JQI and CMTC postdoctoral researcher, collaborated to develop a tool to help scientists understand a variety of graphene stacks. A paper on this research was recently accepted in Physical Review Letters. Inspired by experiments on magic-angle twisted trilayer graphene, they built a theoretical framework to explore how electrons behave on a hexagonal grid. In twisted trilayer graphene, the middle layer is twisted relative to the top and bottom layers, like a cheese sandwich with the slice twisted so that its corners stick out. This graphene sandwich has attracted attention because it hosts superconductivity at a higher temperature than the two-layer version.

The team’s theoretical model describes the electrons’ behavior in a particular quantum world. Applying it to twisted trilayer graphene, they showed that the uncommon pairing of electrons with the same spin could dominate the electrons’ behavior and be the source of twisted trilayer graphene’s superconductivity.

This new tool provides a starting place for investigating other graphene experiments. And the way the identified pairing mechanism influences the electrons may be significant in future discussions of the role of magnetism in graphene experiments.

Magnetism in stacked graphene is its own mysterious magic trick. Magnetism isn’t found in graphite or single layers of graphene but somehow appears when the stacks align. It’s especially notable because superconductivity and magnetism normally can’t coexist in a material the way they appear to in graphene stacks.

“This unconventional superconducting state in twisted trilayer graphene can resist a large magnetic field, a property that is rarely seen in other known superconducting materials,” says Chou.

In another article in Physical Review B, Das Sarma and Wu tackled the conundrum of the simultaneous presence of both superconductivity and magnetism in twisted double bilayer graphene, a system like bilayer graphene except that the twist is between two pairs of aligned graphene sheets (for a total of four sheets). This construction with additional layers has attracted attention because it creates a quantum environment that is more sensitive than a basic bilayer to an electric field applied through the stack, giving researchers a greater ability to tweak the superconductivity and magnetism and observe them in different quantum situations.

In the paper, the team provides an explanation for the source of magnetism and how an applied electric field could produce the observed change to a stack’s magnetic behavior. They believe the magnetism arises in a completely different way than it does in more common magnets, like iron-based refrigerator magnets. In an iron magnet, the individual iron atoms each have their own small magnetic field. But the team believes that in graphene the carbon atoms aren’t becoming magnetic. Instead, they think the magnetism comes from electrons that are freely moving throughout the sheet.

Their theory suggests that double bilayer graphene becomes magnetic because the electrons repel each other more strongly in this particular quantum environment. This additional push could lead the electrons to coordinate their individual magnetic fields into a larger field.

The coordination of electron spins might also be relevant to how electrons pair up and, potentially, become superconducting. Spin can be imagined as an arrow that wants to line up with any surrounding magnetic field. Superconductivity normally fails when magnetism is strong enough to tear apart pairs of opposite-facing spins. But if the two spins in each pair were aligned, that would explain how the two phenomena peacefully coexist in graphene experiments.
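The fragility of opposite-spin pairs can be made semi-quantitative with the textbook Pauli (Clogston-Chandrasekhar) limit: opposite-spin pairs break once the energy the electrons could gain by aligning with the field outweighs the energy they gain by pairing, which happens near B_P = Δ / (√2 μ_B). The sketch below assumes weak-coupling BCS theory (gap Δ ≈ 1.764 k_B T_c) and an electron g-factor of 2, and the critical temperature it uses is a hypothetical input, not a measured value for any graphene sample.

```python
import math

K_B_EV_PER_K = 8.617333262e-5    # Boltzmann constant, eV/K
MU_B_EV_PER_T = 5.7883818060e-5  # Bohr magneton, eV/T
BCS_GAP_RATIO = 1.764            # weak-coupling BCS assumption: gap = 1.764 * k_B * Tc


def pauli_limit_tesla(tc_kelvin):
    """Estimated field (tesla) at which opposite-spin pairs are broken.

    Clogston-Chandrasekhar estimate B_P = gap / (sqrt(2) * mu_B), assuming a
    weak-coupling BCS gap and an electron g-factor of 2.
    """
    gap_ev = BCS_GAP_RATIO * K_B_EV_PER_K * tc_kelvin
    return gap_ev / (math.sqrt(2) * MU_B_EV_PER_T)


tc = 2.0  # hypothetical critical temperature in kelvin, for illustration only
print(f"Tc = {tc} K -> Pauli limit of roughly {pauli_limit_tesla(tc):.1f} T")
```

The estimate scales linearly with the critical temperature, at a bit under 2 tesla per kelvin. Pairs whose spins are already aligned are not broken by this mechanism, which is why superconductivity that survives fields well above this estimate is often read as a hint of same-spin pairing.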

Around the Next Twist in the River

While these theories serve as a guide for researchers pushing into the uncharted territory of graphene research, they are far from a definitive map. At the conference Das Sarma organized in June, a researcher presented new observations of superconductivity in stacks of three graphene sheets without any twist. These stacks are offset so that no layer sits directly on top of another; each hexagon has some of its carbon atoms placed at the centers of the other layers’ hexagons. The experiment revealed two distinct areas of superconductivity, one of which is disturbed by magnetism while the other is not. This suggests that the twist may not be the magical ingredient that produces all of the exotic phenomena. But it also raises new questions, offers a route for identifying which electronic behaviors are created or enhanced by the “magic” twist, and provides a new opportunity to investigate the fundamental sources of the underlying physics.

Yang-Zhi Chou (Credit: Yang-Zhi Chou)
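The offset arrangement of the untwisted three-sheet stacks is commonly called ABC, or rhombohedral, stacking. Assuming that arrangement, the geometry can be checked with a tiny Python sketch: modulo the repeating lattice, every point of interest in a graphene sheet falls into one of three classes (an atom of one sublattice, an atom of the other, or a hexagon center), and shifting a layer by one carbon-carbon bond moves each class into the next. The class labels and function names are illustrative.

```python
# Modulo the repeating lattice, each point of a graphene sheet belongs to one of
# three classes: 0, 1 or 2 C-C bond vectors from a chosen atom. A sheet occupies
# two classes (its two sublattices); the remaining class marks its hexagon centers.


def occupied(shift):
    """Classes holding atoms for a layer displaced by `shift` bond vectors."""
    return {shift % 3, (shift + 1) % 3}


def hexagon_centers(shift):
    """The single empty class: the centers of this layer's hexagons."""
    return ({0, 1, 2} - occupied(shift)).pop()


def compare_layers(top_shift, bottom_shift):
    """Return (some top atoms sit over bottom hexagon centers, layers coincide)."""
    over_centers = hexagon_centers(bottom_shift) in occupied(top_shift)
    coincide = occupied(top_shift) == occupied(bottom_shift)
    return over_centers, coincide


# Assumed ABC (rhombohedral) trilayer: each layer shifted one more bond vector.
ABC = {"A": 0, "B": 1, "C": 2}

for top, t in ABC.items():
    for bottom, b in ABC.items():
        if top != bottom:
            over, same = compare_layers(t, b)
            print(f"{top} over {bottom}: atoms above hexagon centers={over}, directly on top={same}")
```

Every pair of layers reports atoms above the other layer’s hexagon centers and no layer directly on top of another. For comparison, an unshifted (AA) stack, `compare_layers(0, 0)`, reports the opposite on both counts.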

Inspired by this work and by previous observations of magnetism in the same kind of stack, the collaboration of Das Sarma, Sau, Wu and Chou mathematically explored how phonon coupling of electrons might play out in these twist-less stacks. The team’s analysis suggests that phonon pairing is the likely driver of both types of superconductivity, one occurring with matching spins and the other with opposite spins. This work, led by Chou, was recently accepted in Physical Review Letters and has been chosen as a PRL Editors’ Suggestion.

These results represent only a fraction of the graphene research at JQI and the CMTC, and many other researchers have tackled additional aspects of this rich topic. But much remains to be discovered and understood before layered graphene becomes charted and tamed territory. These early discoveries hint that as researchers dig deeper, they may uncover new veins of research offering a wealth of opportunities to understand new physics and perhaps even develop new technologies.

“Applications are hard to predict, but the extreme tunability of these systems showing so many different phases and phenomena makes it likely that there could be applications,” Das Sarma says. “At this stage, it is very exciting fundamental research.”

Original story by Bailey Bedford: https://jqi.umd.edu/news/graphenes-magic-act-relies-on-small-twist

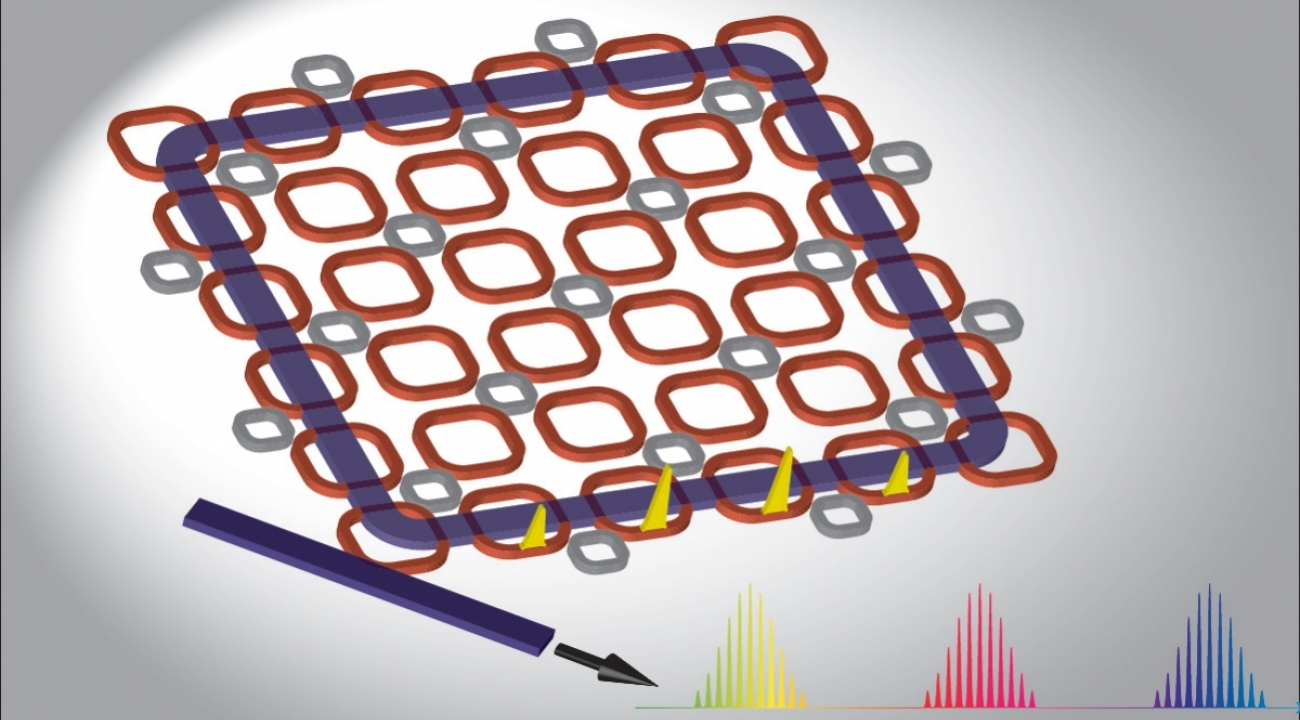

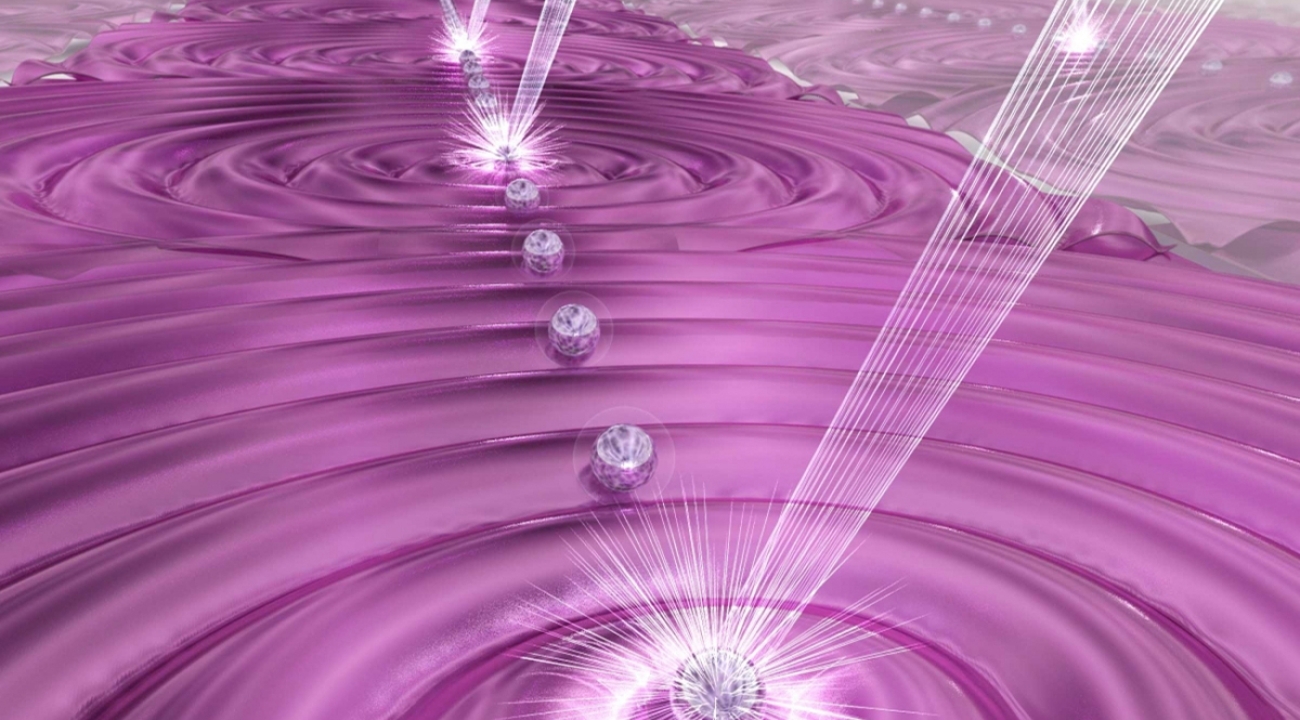

Researchers have demonstrated a new way for atomic ions to host disturbances that do not fade away. (credit: E. Edwards/JQI)

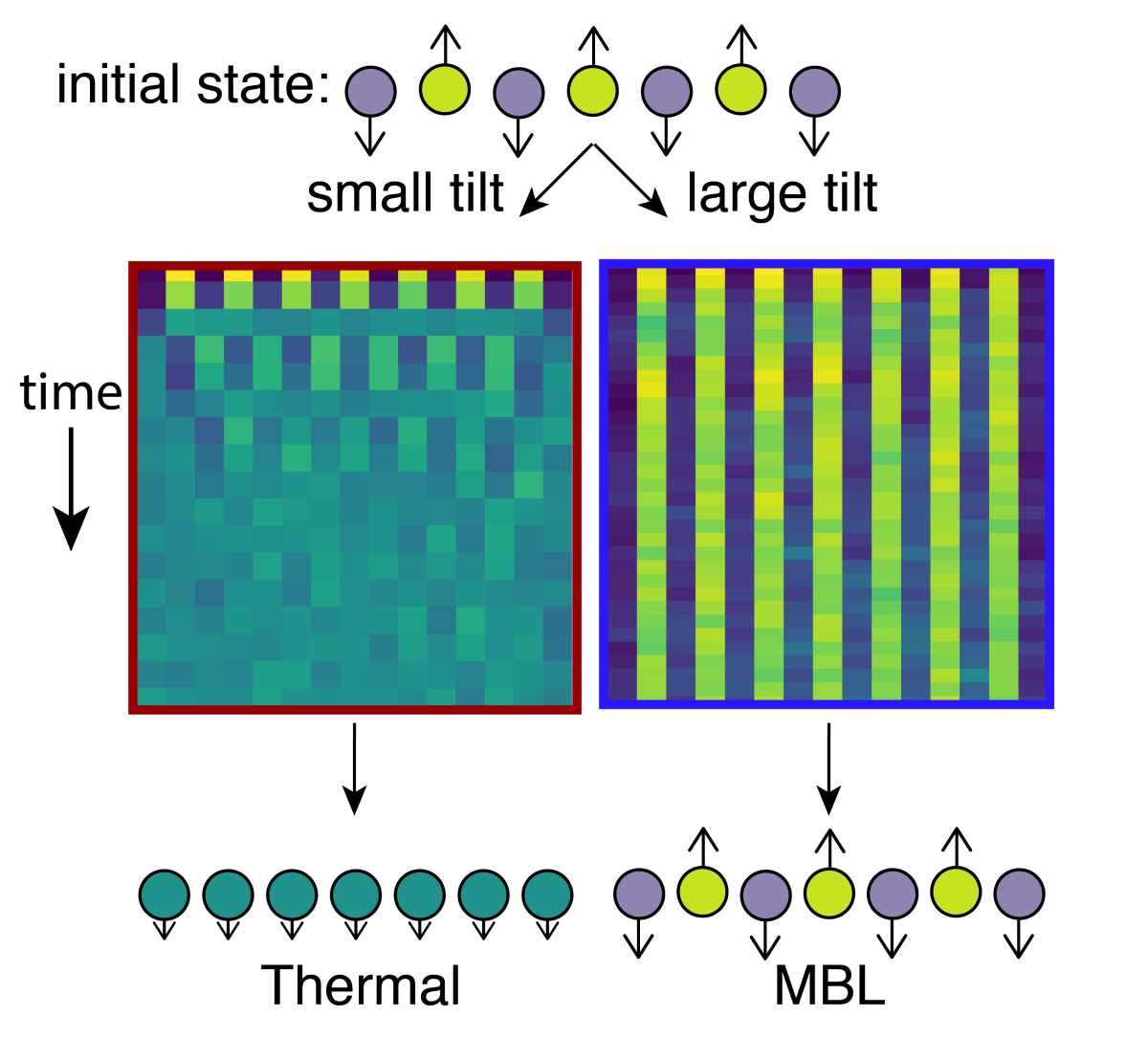

Researchers have demonstrated a new way for atomic ions to host disturbances that do not fade away. (credit: E. Edwards/JQI) Normally, ion spins that start out pointing in opposite directions will interact and reach an equilibrium, with no trace of where they started. But when the tilt in their container is large enough, they keep pointing in their original direction, creating a many-body localized state that remembers its initial configuration. (Credit: Adapted from article by the authors/JQI)day, the egg-carton dimples become offset up or down from each other in a random way, paralyzing each spin in its spot.

Normally, ion spins that start out pointing in opposite directions will interact and reach an equilibrium, with no trace of where they started. But when the tilt in their container is large enough, they keep pointing in their original direction, creating a many-body localized state that remembers its initial configuration. (Credit: Adapted from article by the authors/JQI)day, the egg-carton dimples become offset up or down from each other in a random way, paralyzing each spin in its spot.