Quantum Simulation Stars Light in the Role of Sound


Published: Thursday, July 30, 2020, 05:33

Inside a material, such as an insulator, semiconductor or superconductor, a complex drama unfolds that determines the material’s physical properties. Physicists work to observe these scenes and recreate the script that the actors—electrons, atoms and other particles—play out. It is no surprise that electrons are most frequently the stars in the stories behind electrical properties. But there is an important supporting actor that usually doesn’t get a fair share of the limelight.

This underrecognized actor in the electronic theater is sound, or more specifically the quantum mechanical excitations that carry sound and heat. Scientists treat these quantized vibrations as quantum mechanical particles called phonons. Similar to how photons are quantum particles of light, phonons are quantum particles of sound and other vibrations in a solid. Phonons are always pushing and pulling on electrons, atoms or molecules and producing new interactions between them.
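To make “quantized vibrations” a little more concrete, here is a minimal Python sketch built on the textbook model of a one-dimensional chain of identical atoms (mass m) joined by identical springs (stiffness K) with spacing a. The model, the function names and the numbers are illustrative assumptions, not taken from the paper. In this model a vibration with wavenumber k oscillates at ω(k) = 2√(K/m)|sin(ka/2)|, and quantum mechanics only lets that mode gain or lose energy in steps of ħω; each step is one phonon.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant in J*s

def chain_frequency(k, spring_k, mass, spacing):
    """Angular frequency (rad/s) of a vibration with wavenumber k in a 1D
    chain of identical masses linked by identical springs."""
    return 2.0 * math.sqrt(spring_k / mass) * abs(math.sin(k * spacing / 2.0))

def phonon_energy(n, omega):
    """Energy (J) of a vibrational mode of angular frequency omega that
    holds n phonons: hbar * omega * (n + 1/2)."""
    return HBAR * omega * (n + 0.5)

# Illustrative, made-up parameters (not a specific material).
a = 2.5e-10   # atom spacing in m
K = 10.0      # spring stiffness in N/m
m = 4.7e-26   # atom mass in kg

# Fastest vibration the chain supports: wavenumber at the zone edge, k = pi/a.
w_edge = chain_frequency(math.pi / a, K, m, a)
# Adding one phonon to that mode raises its energy by exactly one quantum.
quantum = phonon_energy(1, w_edge) - phonon_energy(0, w_edge)
print(f"zone-edge frequency: {w_edge:.3e} rad/s, one phonon: {quantum:.3e} J")
```

The energy difference between n and n + 1 phonons is ħω for any n, which is what it means for the vibration to come in particle-like packets.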

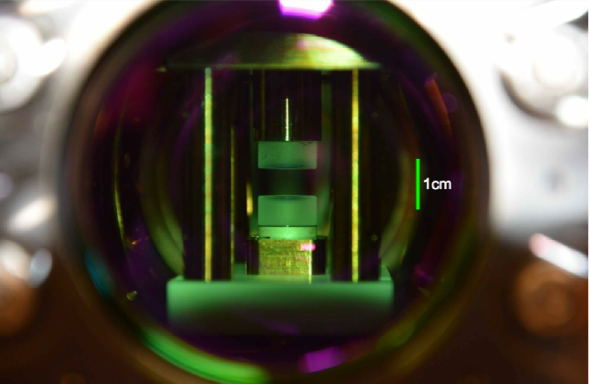

The role that phonons play in the drama can be tricky for researchers to suss out. And sometimes when physicists identify an interesting story to study, they can’t easily find a material with all the requisite properties or of sufficient chemical purity.

Cigar-shaped clouds of atoms (pink) are levitated in a chamber where an experiment uses light to recreate behavior that normally is mediated by quantum particles of sound. (Credit: Yudan Guo, Stanford)

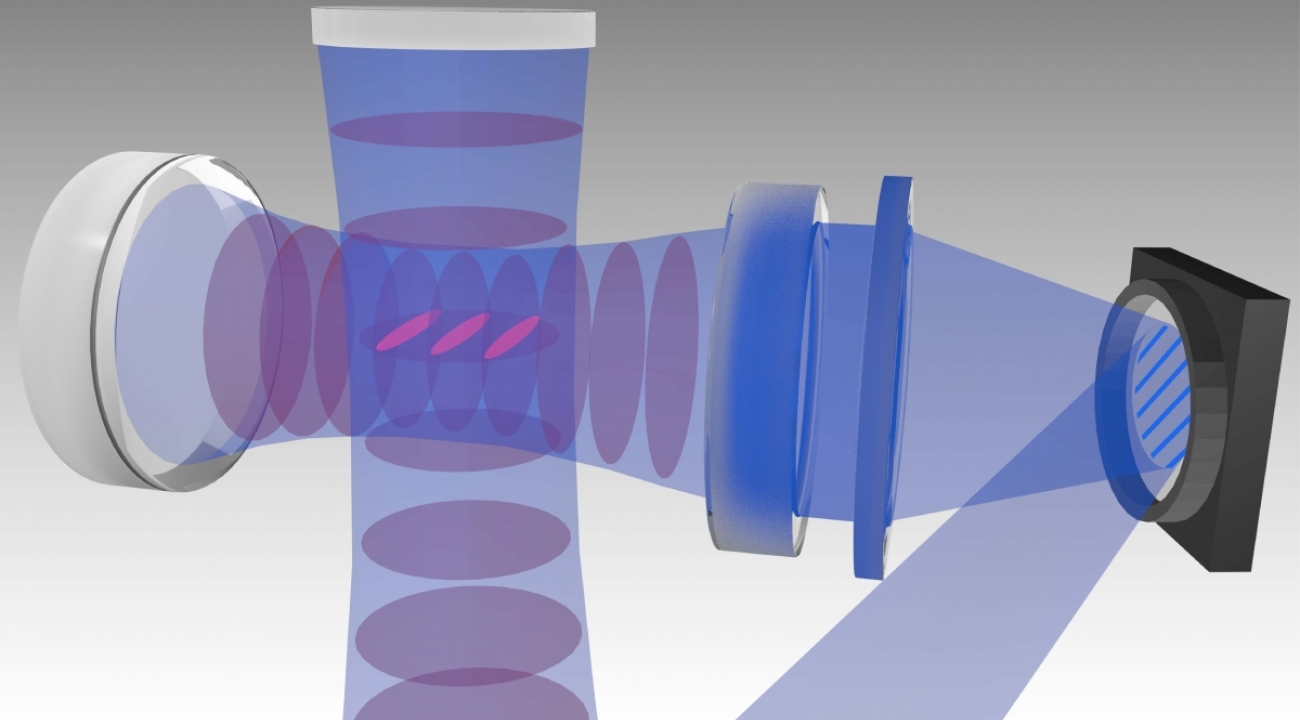

To help overcome the challenges of working directly with phonons in physical materials, Professor Victor Galitski, Joint Quantum Institute (JQI) postdoctoral researcher Colin Rylands and their colleagues have cast photons in the role of phonons in a classic story of phonon-driven physics. In a paper published recently in Physical Review Letters, the team proposes an experiment to demonstrate photons’ adequacy as an understudy and describes the setup to make the show work.

“The key idea came from an interdisciplinary collaboration that led to the realization that seemingly unrelated electron-phonon systems and neutral atoms coupled to light may share the exact same mathematical description,” says Galitski. “This new approach promises to deliver a treasure trove of exciting phenomena that can be transplanted from material physics to light-matter cavity systems and vice versa.”

The Stage

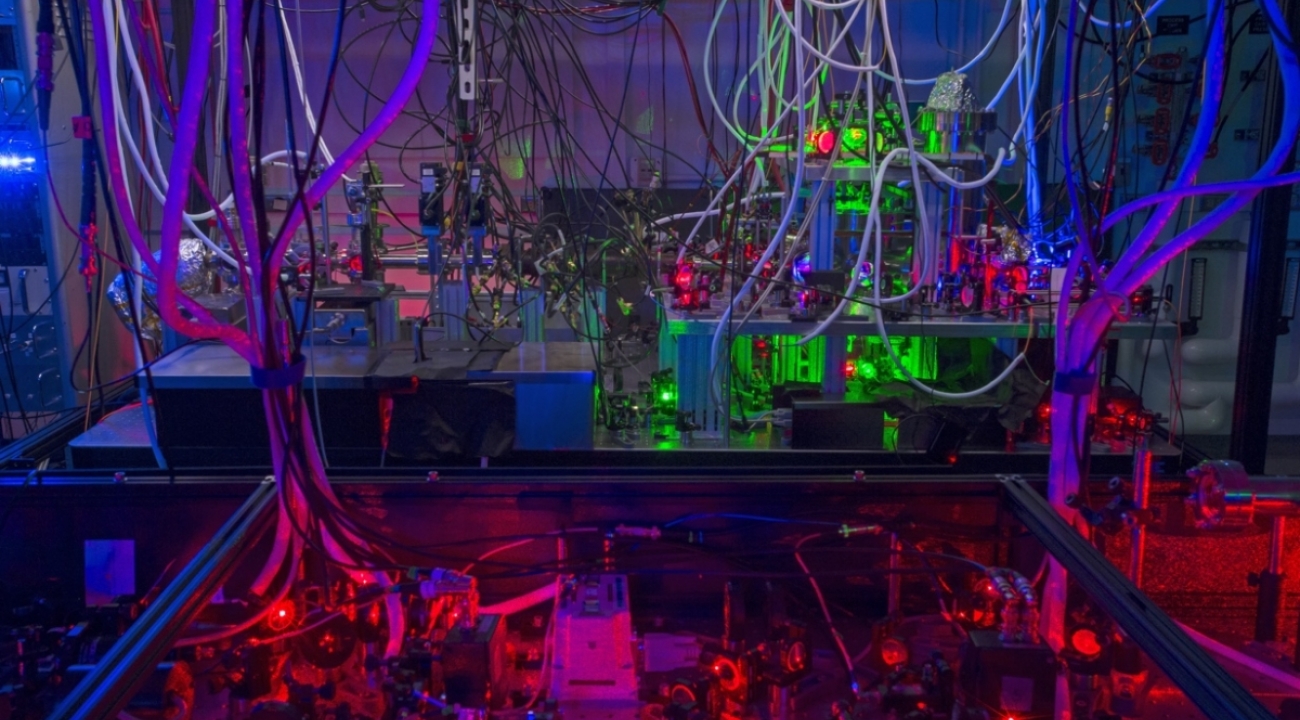

Galitski and colleagues propose using a very carefully designed mirrored chamber—like the ones coauthor Benjamin Lev has in his lab at Stanford University—as the stage where photons can take on the role of phonons. This type of chamber, called an optical cavity, is designed to hold light for a long time by bouncing it between its highly reflective walls.

“We made cavities where if you stick your head in there—of course it's only a centimeter wide—you would see yourself 50,000 times,” says Lev. “Our mirrors are very highly polished and so the reflections don't rapidly decay away and get lost.”

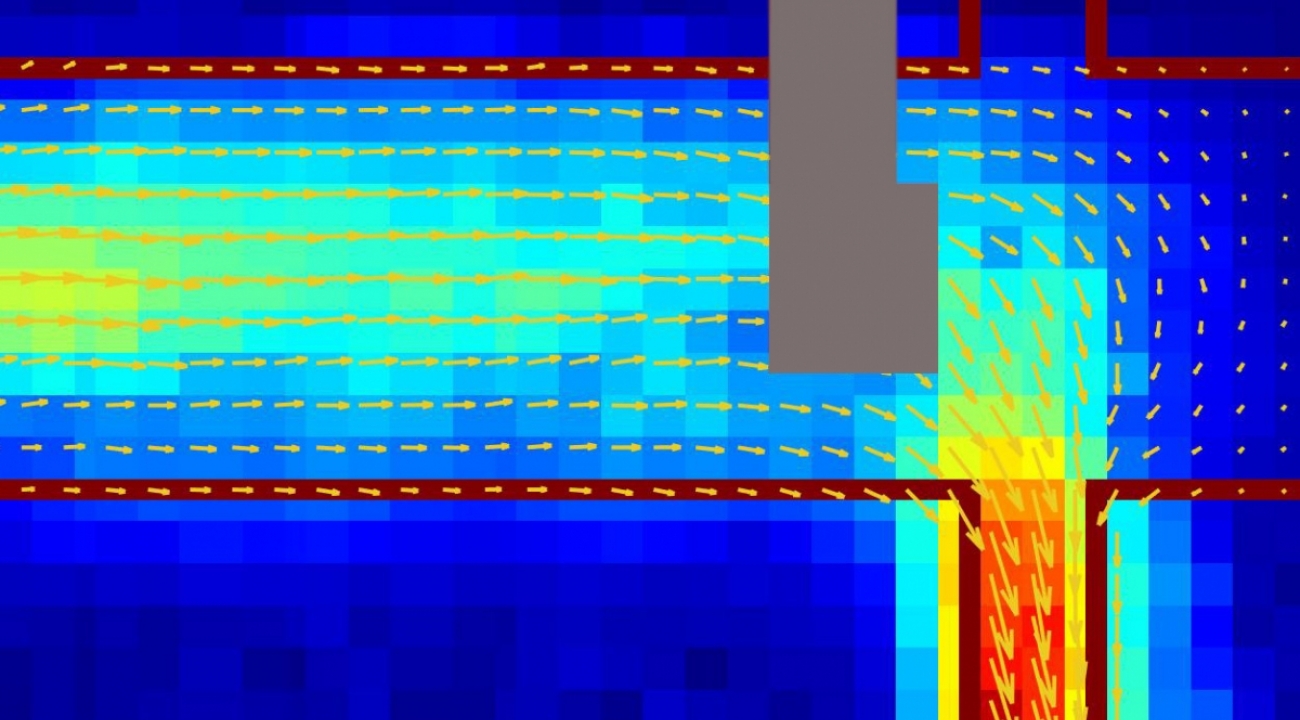

In an optical cavity, the bouncing light can hold a cloud of atoms in a pattern that mimics the lattice of atoms in a solid. But if a cavity is too simple and can only contain a single light pattern—a mode—the lattice is frozen in place. The light has to be able to take on many modes to simulate the natural distortions of phonons in the material.

To create more dynamic stories with phonons, the team suggests using multimode confocal cavities. “Multimode confocal” basically means the chamber is shaped with unforgiving precision so that it can contain many distinct spatial distributions of light.

“If it were just a normal single-mode cavity—two curved mirrors spaced at some arbitrary distance from one another—it would only be a Gaussian shape that could bounce back and forth and would be kind of boring; your face would be really distorted,” says Lev. “But if you stick your face in our cavities, your face wouldn’t look too different—it looks a little different, but not too different. You can support most of the different shapes of the waveform of your face, and that will bounce back and forth.”


Mirrors with a green tint can be seen inside a small experimental cavity. View of the cavity mirrors that serve as a stage for quantum simulations where light takes on the role of sound. (Credit: LevLab, Stanford)

The variety of light distributions that these special cavities can harbor, along with the fact that the photons can interact with one atom and then get reflected back to a different atom, allows the researchers to create many different interactions in order to cast the light as phonons.

“It's that profile of light in the cavity which is playing the role of the phonons,” says Jonathan Keeling, a coauthor of the paper and a physicist at the University of St Andrews. “So the equivalent of the lattice distorting in one place is that this light is more intense in this place and less intense in another place.”

The Script

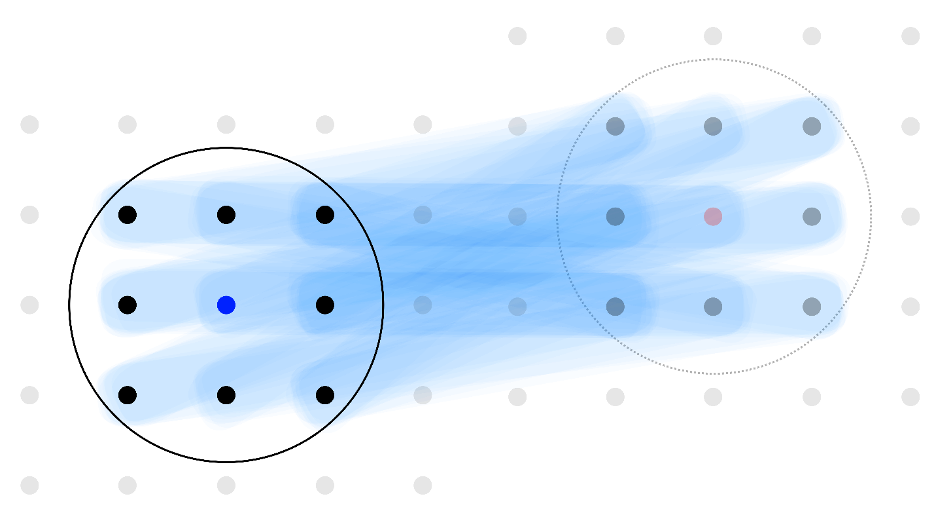

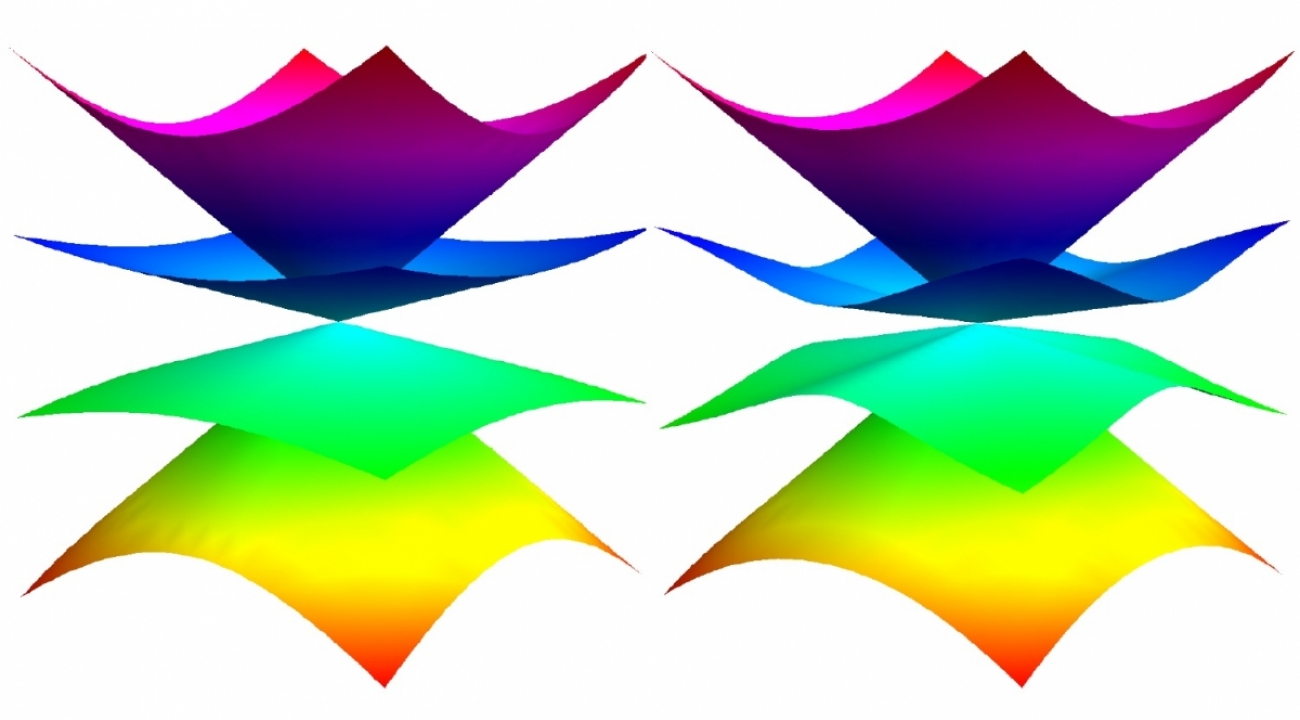

In the paper, the team proposes the first experiment to perform with these multimode confocal cavities—the first play to premiere on the new stage. It’s a classic of condensed matter physics: the Peierls transition. The transition occurs in one-dimensional chains of particles with an attractive force between them. The attractive force leads the particles to pair up so that they form a density wave—two close particles and a space followed by two close particles and a space, on and on. Even a tiny pull between particles creates an instability that pulls them into pairs instead of leaving them evenly spaced.

In certain materials, the attractive pull from phonons is known to trigger a dramatic electrical effect through the Peierls transition. The creation of the density wave makes it harder for electrons to move through a material—resulting in a sudden transition from conductor to insulator.
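The claim that even a tiny pull triggers pairing, and that pairing opens an insulating gap, can be checked numerically in the textbook solid-state version of the story. The Python sketch below rests on assumptions of our own and is not the team’s cavity model: a half-filled chain of spinless electrons hopping between neighboring sites, where a pairing distortion δ makes the hopping alternate between t(1+δ) and t(1−δ), and the lattice pays a harmonic elastic cost C·δ² for distorting. Only the lower band, with energies −|t₁ + t₂e^{ik}|, is filled; a gap of 4t|δ| opens at its edge, and the electronic energy saved scales roughly as δ²·ln(1/δ), which beats the δ² elastic cost for small enough δ. The function names and the values t = 1 and C = 2 are hypothetical.

```python
import cmath
import math

def band_energy_per_cell(delta, t=1.0, n_k=4000):
    """Electronic ground-state energy per two-site unit cell of a half-filled,
    spinless chain whose hopping alternates t*(1+delta), t*(1-delta).
    Only the lower band, E(k) = -|t1 + t2*exp(ik)|, is occupied; it is
    averaged over a midpoint grid of n_k wavenumbers in [-pi, pi)."""
    t1, t2 = t * (1 + delta), t * (1 - delta)
    total = 0.0
    for j in range(n_k):
        k = -math.pi + (j + 0.5) * 2.0 * math.pi / n_k
        total -= abs(t1 + t2 * cmath.exp(1j * k))
    return total / n_k

def band_gap(delta, t=1.0):
    """Gap between the lower and upper bands at k = pi: 2|t1 - t2| = 4t|delta|."""
    return 2.0 * abs(t * (1 + delta) - t * (1 - delta))

def energy_change(deltas, stiffness, t=1.0, n_k=4000):
    """Energy per cell relative to the undistorted chain: the electronic change
    plus an assumed harmonic elastic cost stiffness * delta**2."""
    e0 = band_energy_per_cell(0.0, t, n_k)
    return [band_energy_per_cell(d, t, n_k) - e0 + stiffness * d * d for d in deltas]

# Scan pairing distortions from 0 to 0.3 for a hypothetical stiffness C = 2t.
deltas = [i * 0.005 for i in range(61)]
energies = energy_change(deltas, stiffness=2.0)
best = deltas[energies.index(min(energies))]
print(f"preferred distortion: {best:.3f}, gap there: {band_gap(best):.3f} t")
```

The scan settles on a small but nonzero δ, and `band_gap` reports the gap that this pairing opens; with the lower band full and the upper band empty, that gap is what turns the would-be conductor into an insulator. Within this idealized model, a stiffer lattice shrinks the preferred distortion roughly exponentially but never removes it.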

“The Peierls transition is mathematically very similar to, but less well known than, superconductivity,” says Rylands. “And, like in superconducting systems, or many other systems that you would study in a solid-state lab, all these different phases of matter are driven by the interactions between phonons and the electrons.”

To recast the phonons as light, the team also has to recast the electrons in the 1D material as cigar-shaped clouds of atoms levitated in the chamber, as shown in the image above. But in this case, they don’t have a sudden cutoff of electrical current to conveniently signal that the transition occurred, as in traditional experiments with solids. Instead, they predicted what the light exiting the cavity should look like if the transition occurs.

Opening Night

The authors say that the proposed experiment will debut in cavities in Lev’s lab.

“It’s kind of nice when you have an experimentalist on a theory paper—you can hold the theorists’ feet to the fire and say, ‘Well, you know, that sounds great, but you can't actually do that,’” says Lev. “And here we made sure that you can do everything in there. So really, it's a quite literal roadmap to what we're going to do in the near future.”

If this experiment lines up with their predictions, it will give them confidence in the system’s ability to reveal new physics through other simulations.

There is competition for which shows will get the chance to take center stage, since there are many different scripts of physical situations that researchers can use the cavities to explore. The technique has promise for creating new phases of matter, investigating dynamic quantum mechanical situations, and understanding materials better. The cavities as stages for quantum simulations put researchers in the director’s chair for many quantum shows.

Original story by Bailey Bedford: https://jqi.umd.edu/news/quantum-simulation-stars-light-role-sound

A note from the researchers: The JQI and Stanford collaborators are especially grateful for support from the US Army Research Office, and discussions with Dr. Paul Baker at US-ARO, that made this work possible.

In addition to Galitski, Rylands, Lev and Keeling, Stanford graduate student Yudan Guo was also a co-author of the paper.