Currently, computing technologies are rapidly evolving and reshaping how we imagine the future. Quantum computing is taking its first toddling steps toward delivering practical results that promise unprecedented abilities. Meanwhile, artificial intelligence remains in public conversation as it’s used for everything from writing business emails to generating bespoke images or songs from text prompts to producing deep fakes.

Some physicists are exploring the opportunities that arise when the power of machine learning—a widely used approach in AI research—is brought to bear on quantum physics. Machine learning may accelerate quantum research and provide insights into quantum technologies, and quantum phenomena present formidable challenges that researchers can use to test the bounds of machine learning.

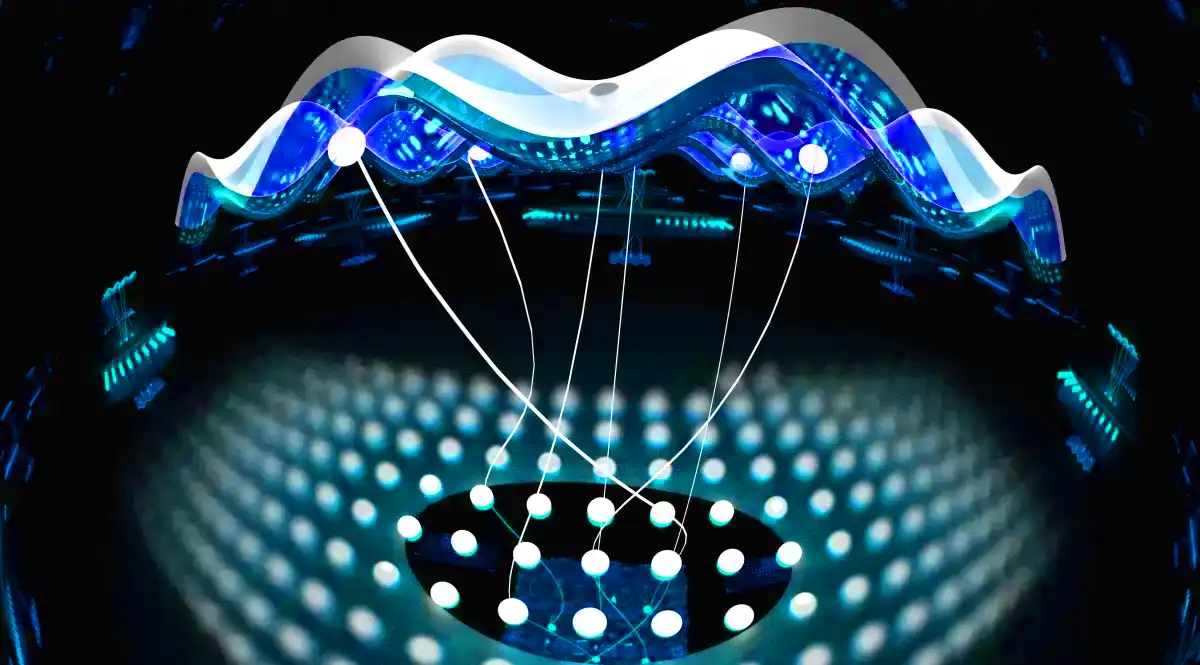

When studying quantum physics or its applications (including the development of quantum computers), researchers often rely on a detailed description of many interacting quantum particles. But the very features that make quantum computing potentially powerful also make quantum systems difficult to describe using current computers. In some instances, machine learning has produced descriptions that capture the most significant features of quantum systems while ignoring less relevant details, efficiently providing useful approximations.

An artistic rendering of a neural network consisting of two layers. The top layer represents a real collection of quantum particles, like atoms in an optical lattice. The connections with the hidden neurons below account for the particles’ interactions. (Credit: Modified from original artwork created by E. Edwards/JQI)

In a paper published May 20, 2024, in the journal Physical Review Research, two researchers at JQI presented new mathematical tools that will help researchers use machine learning to study quantum physics. And using these tools, they have identified new opportunities in quantum research where machine learning can be applied.

“I want to understand the limit of using traditional classical machine learning tools to understand quantum systems,” says JQI graduate student Ruizhi Pan, who was the first author of the paper.

The standard tool for describing collections of quantum particles is the wavefunction, which provides a complete description of the quantum state of the particles. But obtaining the wavefunction for more than a handful of particles tends to require impractical amounts of time and resources.
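A rough back-of-the-envelope sketch shows how quickly this cost grows. It assumes spin-1/2 particles (two states each, so N particles need 2^N amplitudes) and 16 bytes per complex amplitude; the function name is hypothetical and chosen only for illustration.

```python
def wavefunction_memory_bytes(num_spins: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store every amplitude of a spin-1/2 wavefunction.

    Assumes one complex amplitude (16 bytes by default) for each of the
    2**num_spins basis configurations.
    """
    return (2 ** num_spins) * bytes_per_amplitude


if __name__ == "__main__":
    # Each added spin doubles the storage requirement.
    for n in (10, 30, 50):
        gib = wavefunction_memory_bytes(n) / 2 ** 30
        print(f"{n} spins: {gib:.3e} GiB")
```

Under these assumptions, storage doubles with every added spin, which is why a full wavefunction quickly becomes impractical to write down.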

Researchers have previously shown that AI can approximate some families of quantum wavefunctions using fewer resources. In particular, physicists, including CMTC Director and JQI Fellow Sankar Das Sarma, have studied how to represent quantum states using neural networks—a common machine learning approach in which webs of connections handle information in ways reminiscent of the neurons firing in a living brain. Artificial neural networks are made of nodes—sometimes called artificial neurons—and connections of various strengths between them.

Today, neural networks take many forms and are used in diverse applications. Some neural networks analyze data, like inspecting the individual pixels of a picture to tell if it contains a person, while others model a process, like generating a natural-sounding sequence of words given a prompt or selecting moves in a game of chess. The webs of connections formed in neural networks have proven useful at capturing hard-to-identify relationships, patterns and interactions in data and models, including the unique interactions of quantum particles described by wavefunctions.

But neural networks aren’t a magic solution to every situation or even to approximating every wavefunction. Sometimes, to deliver useful results, the network would have to be too big and complex to practically implement. Researchers need a strong theoretical foundation to understand when neural networks are useful and under what circumstances they fall prey to errors.

In the new paper, Pan and JQI Fellow Charles Clark investigated a type of neural network called a restricted Boltzmann machine (RBM), in which the nodes are split into two layers and connections are only allowed between nodes in different layers. One layer is called the visible, or input, layer, and the second is called the hidden layer, since researchers generally don’t directly manipulate or interpret it as much as they do the visible layer.

“The restricted Boltzmann machine is a concept that is derived from theoretical studies of classical ‘spin glass’ systems that are models of disordered magnets,” Clark says. “In the 1980s, Geoffrey Hinton and others applied them to the training of artificial neural networks, which are now widely used in artificial intelligence. Ruizhi had the idea of using RBMs to study quantum spin systems, and it turned out to be remarkably fruitful.”

For RBM models of quantum systems, physicists frequently use each node of the visible layer to represent a quantum particle, like an individual atom, and use the connections made through the hidden layer to capture the interactions between those particles. As the size and complexity of quantum states grow, a neural net needs more and more hidden nodes to keep up, eventually becoming unwieldy.
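As a minimal sketch of this idea, the snippet below evaluates an RBM-style amplitude for a spin configuration using a form common in the neural-network quantum state literature: a visible-bias factor times one factor of 2·cosh(...) per hidden node. The article does not give the formula, so the exact conventions, the restriction to real parameters, and the names `rbm_amplitude` and `rbm_state` are assumptions made for illustration.

```python
import math
from itertools import product


def rbm_amplitude(spins, a, b, W):
    """Unnormalized RBM amplitude with the hidden nodes summed out.

    spins: tuple of +1/-1 values, one per visible node (one per particle).
    a: visible biases; b: hidden biases.
    W[j][i]: connection strength between hidden node j and visible node i.
    """
    visible_term = math.exp(sum(a_i * s_i for a_i, s_i in zip(a, spins)))
    hidden_term = 1.0
    for b_j, w_j in zip(b, W):
        theta = b_j + sum(w * s for w, s in zip(w_j, spins))
        hidden_term *= 2.0 * math.cosh(theta)  # one factor per hidden node
    return visible_term * hidden_term


def rbm_state(a, b, W):
    """Return all spin configurations and their normalized amplitudes."""
    configs = list(product((1, -1), repeat=len(a)))
    amps = [rbm_amplitude(s, a, b, W) for s in configs]
    norm = math.sqrt(sum(x * x for x in amps))
    return configs, [x / norm for x in amps]


if __name__ == "__main__":
    # Hypothetical parameters: 3 spins, 2 hidden nodes linking neighbors.
    a = [0.0, 0.0, 0.0]
    b = [0.0, 0.0]
    W = [[0.5, 0.5, 0.0], [0.0, 0.5, 0.5]]
    for config, amp in zip(*rbm_state(a, b, W)):
        print(config, round(amp, 4))
```

Each hidden node multiplies in one more factor, so adding hidden nodes lets the network express richer relationships between spins at the price of more parameters to store and train.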

However, the exact relationships between the complexity of a quantum state, the number of hidden nodes used in a neural network, and the resulting accuracy of the approximation are difficult to pin down. This lack of clarity is an example of the black box problem that permeates the field of machine learning. It exists because researchers don’t meticulously engineer the intricate web of a neural network but instead rely on repeated steps of trial and error to find connections that work. This approach often delivers more accurate or efficient results than researchers know how to achieve by working from first principles, but it doesn’t explain why the connections that make up the neural network deliver the desired result—so the results might as well have come from a black box. This built-in inscrutability makes it difficult for physicists to know which quantum models are practical to tackle with neural networks.

Pan and Clark decided to peek behind the veil of the hidden layer and investigate how neural networks boil down the essence of quantum wavefunctions. To do this, they focused on neural network models of a one-dimensional line of quantum spins. A spin is like a little magnetic arrow that wants to point along a magnetic field and is key to understanding how magnets, superconductors and most quantum computers function.

Spins naturally interact by pushing and pulling on each other. Through chains of interactions, even two distant spins can become correlated—meaning that observing one spin also provides information about the other spin. All the correlations between particles tend to drive quantum states into unmanageable complexity.
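One simple way to make "correlated" concrete is the connected correlation, the average of the product of two spins minus the product of their averages. The sketch below is an illustration only: it works with classical measurement probabilities along a single axis, and the two example distributions are made up for the comparison.

```python
from itertools import product


def connected_correlation(probs, i, j):
    """Return <s_i s_j> - <s_i><s_j> for a distribution over spin configs.

    probs maps each configuration (a tuple of +1/-1) to its probability.
    """
    mean_i = sum(p * s[i] for s, p in probs.items())
    mean_j = sum(p * s[j] for s, p in probs.items())
    mean_ij = sum(p * s[i] * s[j] for s, p in probs.items())
    return mean_ij - mean_i * mean_j


n = 4
# All spins always point the same way: seeing one tells you the rest.
aligned = {(1,) * n: 0.5, (-1,) * n: 0.5}
# Every configuration equally likely: seeing one spin tells you nothing.
independent = {s: 1 / 2 ** n for s in product((1, -1), repeat=n)}

if __name__ == "__main__":
    print("aligned:", connected_correlation(aligned, 0, n - 1))
    print("independent:", connected_correlation(independent, 0, n - 1))
```

A value near zero means the two spins carry no information about each other, while a value with magnitude near one means measuring the first spin essentially fixes the second, even when they sit at opposite ends of the chain.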

Pan and Clark did something that at first glance might not seem relevant to the real world: They imagined and analyzed a neural network that uses infinitely many hidden nodes to model a fixed number of spins.

“In reality of course we don't hope to use a neural network with an infinitely large system size,” Pan says. “We often want to use finite size neural networks to do the numerical computations, so we need to analyze the effects of doing truncations.”

Pan and Clark already knew that using more hidden nodes generally produced more accurate results, but the research community only had a fuzzy understanding of how the accuracy suffers when fewer hidden nodes are used. By backing up and getting a view of the infinite case, Pan and Clark were able to describe the hypothetical, perfectly accurate representation and observe the contribution made by each of the infinitely many hidden nodes. The nodes don’t all contribute equally. Some capture the basics of significant features, while many contribute small corrections.

The pair developed a method that sorts the hidden nodes into groups based on how much correlation they capture between spins. Based on this approach, Pan and Clark developed mathematical tools for researchers to use when developing, comparing and interpreting neural networks. With their new perspective and tools, Pan and Clark identified and analyzed the forms of errors they expect to arise from truncating a neural network, and they identified theoretical limits on how big the errors can get in various circumstances.
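The effect of truncation can be explored numerically on a toy example. The sketch below is not the authors' method: it ranks hidden nodes by total connection strength (a stand-in for the paper's correlation-based grouping, which the article does not spell out), keeps only the strongest ones, and measures the overlap between the truncated and full states. The weights, the real-valued RBM form with zero visible bias, and all function names are hypothetical.

```python
import math
from itertools import product


def rbm_state(b, W, n):
    """Normalized real RBM amplitudes over all 2**n spin configurations."""
    amps = []
    for spins in product((1, -1), repeat=n):
        amp = 1.0
        for b_j, w_j in zip(b, W):
            amp *= 2.0 * math.cosh(b_j + sum(w * s for w, s in zip(w_j, spins)))
        amps.append(amp)
    norm = math.sqrt(sum(x * x for x in amps))
    return [x / norm for x in amps]


def truncate(b, W, keep):
    """Keep the `keep` hidden nodes with the largest total |weight|.

    This ranking is an illustrative assumption, not the paper's grouping.
    """
    ranked = sorted(range(len(W)), key=lambda j: sum(abs(w) for w in W[j]),
                    reverse=True)[:keep]
    return [b[j] for j in ranked], [W[j] for j in ranked]


def overlap(psi, phi):
    """Absolute overlap of two normalized real states (1.0 means identical)."""
    return abs(sum(x * y for x, y in zip(psi, phi)))


n = 4
num_hidden = 6
# Hypothetical weights: hidden node j links neighboring spins j and j+1
# (wrapping around), with a strength that halves for each later node.
W = []
for j in range(num_hidden):
    row = [0.0] * n
    row[j % n] = row[(j + 1) % n] = 0.8 / 2 ** j
    W.append(row)
b = [0.0] * num_hidden

full = rbm_state(b, W, n)
overlaps = []
for keep in range(num_hidden + 1):
    b_kept, W_kept = truncate(b, W, keep)
    overlaps.append(overlap(full, rbm_state(b_kept, W_kept, n)))

if __name__ == "__main__":
    for keep, value in enumerate(overlaps):
        print(f"hidden nodes kept: {keep}  overlap with full state: {value:.6f}")
```

Running the script prints how close each truncated network stays to the full one, which gives a feel for the kind of accuracy-versus-size tradeoff that the paper's error bounds are meant to quantify.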

In previous work, physicists generally relied on restricting the number of connections allowed for each hidden node to keep the complexity of the neural network in check. This in turn generally limited the reach of interactions between particles that could be modeled—earning the resulting collection of states the name short-range RBM states.

Pan and Clark’s work revealed a chance to apply RBMs outside of those restrictions. They defined a new group of states, called long-range-fast-decay RBM states, that have less strict conditions on hidden node connections but that still often remain accurate and practical to implement. The looser restrictions on the hidden node connections allow a neural network to represent a greater variety of spin states, including ones with interactions stretching farther between particles.

“There are only a few exactly solvable models of quantum spin systems, and their computational complexity grows exponentially with the number of spins,” says Clark. “It is essential to find ways to reduce that complexity. Remarkably, Ruizhi discovered a new class of such systems that are efficiently attacked by RBMs. It’s the old hero-returns-home story: from classical spin glass came the RBM, which grew up among neural networks, and returned home with a gift of order to quantum spin systems.”

The pair’s analysis also suggests that their new tools can be adapted to work for more than just one-dimensional chains of spins, including particles arranged in two or three dimensions. The authors say these insights can help physicists explore the divide between states that are easy to model using RBMs and those that are impractical. The new tools may also guide researchers to be more efficient at pruning a network’s size to save time and resources. Pan says he hopes to further explore the implications of their theoretical framework.

“I'm very happy that I realized my goal of building our research results on a solid mathematical basis,” Pan says. “I'm very excited that I found such a research field which is of great prospect and in which there are also many unknown problems to be solved in the near future.”

Original story by Bailey Bedford: https://jqi.umd.edu/news/attacking-quantum-models-ai-when-can-truncated-neural-networks-deliver-results