Of all the light-trapping chip technologies out there, two stand the tallest: the evocatively named whispering gallery mode microrings, which are easy to manufacture and can trap light of many colors very efficiently, and photonic crystals, which are much trickier to make and to inject light into but are unrivaled in their ability to confine light of a particular color in a tiny space, resulting in a very large intensity of light for each confined photon.

Recently, a team of researchers at JQI struck upon a clever way to combine whispering gallery modes and photonic crystals in one easily manufacturable device. This hybrid device, which they call a microgear photonic crystal ring, can trap many colors of light while also capturing particular colors in tightly confined, high-intensity bundles. This unique combination of features opens a route to new applications, as well as exciting possibilities for manipulating light in novel ways for basic research.

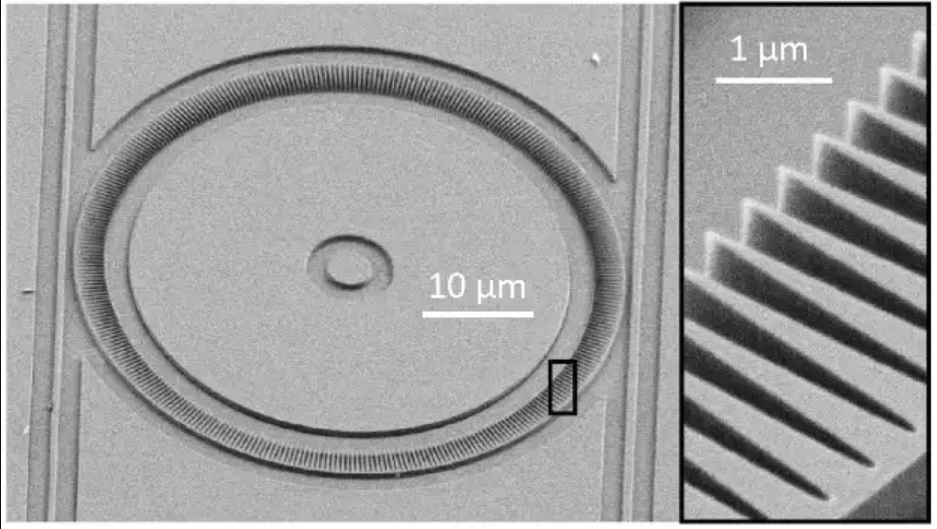

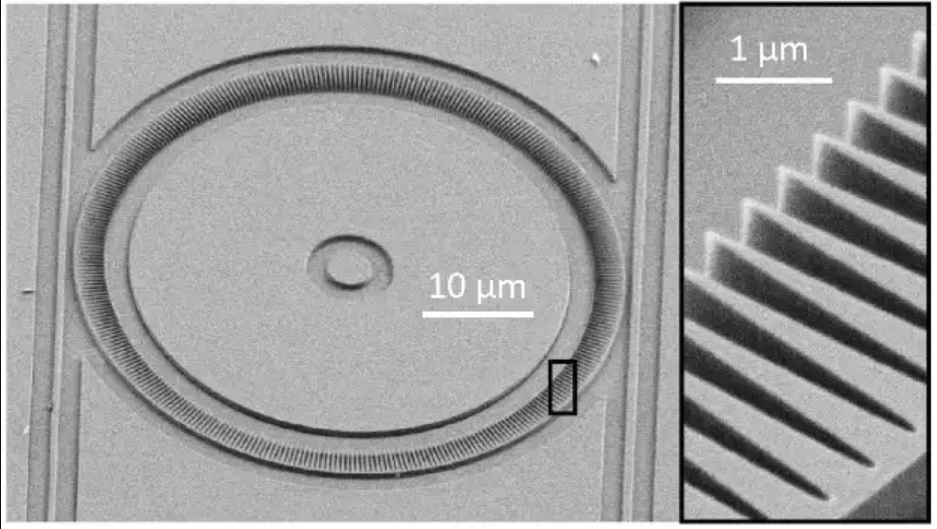

Scanning electron microscope image of a novel photonic microring with micron-scale gears patterned inside a larger circle. (Credit: Kartik Srinivasan/JQI)

“There are potential applications, like single photon sources and quantum gates,” says Adjunct Professor Kartik Srinivasan, who is also a fellow of the National Institute of Standards and Technology (NIST). “But a part of it is also fun electromagnetism and fun optical phenomena in these devices.”

The team introduced their device in a paper published in the journal Nature Photonics in 2021, and they showed off more of what it can do in a paper published recently in the journal Physical Review Letters.

Whispering gallery mode (WGM) microrings are named after the Whispering Gallery inside St. Paul’s Cathedral, a masterpiece of Baroque architecture that towers over London. A whisper in the gallery can be heard anywhere along it because the sound is trapped by the round wall and reflected back inside. Similarly, optical WGMs trap light in a ring, typically about a tenth of a millimeter in diameter, made of silica or another material that is transparent at optical wavelengths. Light of the right color, meaning light for which a whole number of wavelengths fits around the ring, travels round and round the ring many thousands of times before leaking out, producing a high light intensity in a small volume. Building a WGM microring that traps the desired color with minimal loss, and getting light into that ring, is relatively straightforward for a wide range of colors.
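The "right color" condition can be made concrete with a short calculation. A ring resonates when a whole number m of wavelengths, measured inside the material, fits around its circumference: m · λ = n_eff · π · D, where D is the ring diameter and n_eff is the effective refractive index the light experiences. The Python sketch below lists the resonant wavelengths in a narrow window near 1550 nm for a ring 100 µm across (a tenth of a millimeter). The value n_eff = 1.8, the wavelength window, and the function name are illustrative assumptions, not parameters of the JQI devices, and the model ignores dispersion by treating n_eff as constant.

```python
import math

def resonant_wavelengths(diameter_um, n_eff, lo_um, hi_um):
    """Return (m, wavelength_um) pairs with m * wavelength = n_eff * pi * D
    for wavelengths in [lo_um, hi_um]. Assumes a wavelength-independent n_eff."""
    optical_path = n_eff * math.pi * diameter_um  # optical circumference in micrometres
    m_min = math.ceil(optical_path / hi_um)       # longest wavelength -> smallest m
    m_max = math.floor(optical_path / lo_um)      # shortest wavelength -> largest m
    return [(m, optical_path / m) for m in range(m_min, m_max + 1)]

# Illustrative, assumed parameters: 100 um diameter ring, n_eff = 1.8
modes = resonant_wavelengths(diameter_um=100.0, n_eff=1.8, lo_um=1.545, hi_um=1.555)
for m, lam in modes:
    print(f"m = {m}: {lam * 1000:.2f} nm")
```

In this toy example neighboring resonances sit a little over 4 nm apart. A smaller ring would space them farther apart and a larger ring closer together, which is why a WGM ring offers a whole comb of colors rather than just one.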

Photonic crystals can confine light to much smaller volumes, sometimes less than one wavelength across. They achieve this with a carefully crafted periodic structure: a grid of holes or posts patterned into a chip. The regular grid reflects light of a very specific color, and a small, intentionally introduced imperfection in the grid, called a defect, holds that light in place while the surrounding reflecting grid keeps it from escaping, trapping it in a tiny space. Photonic crystals outperform WGMs in the light intensity they can create per photon, but they require very detailed electromagnetic design and precise manufacturing to implement in practice. Moreover, photonic crystals that can trap multiple colors have been challenging to realize.

Like a WGM microring, the new hybrid ring is easy to manufacture and to guide light into, but like a photonic crystal, it also provides extra localization for particular colors. The design of this hybrid is surprisingly simple. The researchers created a regular microring out of silicon nitride, a hollow circle much like the gallery in St. Paul’s Cathedral. To add a photonic crystal element, they cut notches into the inside wall of the ring, making it resemble a gear. It turned out that the gear notches didn’t reduce the number of times the light went around before leaking out: the ring trapped light just as well as before. To add a defect, the researchers simply changed the size of a few of the notches. The result is that the microgears confine just a few colors of light into tight bundles while allowing other colors to circle the microring freely.
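Why a gear picks out particular colors can be sketched with the textbook first-order Bragg condition, applied here as an illustration rather than quoted as the team's design rule: a periodic modulation reflects light most strongly when its period equals half a wavelength in the material, Λ = λ / (2 · n_eff). Combining this with the ring resonance condition m · λ = n_eff · π · D gives a gear with 2m teeth, which couples the clockwise and counterclockwise waves of mode m and leaves other modes unmatched. The ring diameter, n_eff, target wavelength, and helper names below are hypothetical.

```python
import math

def teeth_for_target(diameter_um, n_eff, target_um):
    """Pick the ring mode m closest to the target wavelength and return
    (m, number_of_teeth, tooth_period_um) under the first-order Bragg condition."""
    circumference = math.pi * diameter_um
    m = round(n_eff * circumference / target_um)  # nearest resonance order
    n_teeth = 2 * m                               # one period = half a wavelength in the material
    period = circumference / n_teeth              # physical spacing of the notches
    return m, n_teeth, period

def is_bragg_matched(m, n_teeth):
    """First-order coupling between modes +m and -m needs exactly 2*m periods
    (higher-order Bragg coupling is ignored in this sketch)."""
    return n_teeth == 2 * m

# Hypothetical ring: 100 um diameter, n_eff = 1.8, targeting light near 1550 nm
m, n_teeth, period = teeth_for_target(100.0, 1.8, 1.55)
print(m, n_teeth, f"{period * 1000:.1f} nm")
print([k for k in range(m - 2, m + 3) if is_bragg_matched(k, n_teeth)])
```

With these assumed numbers the gear needs 730 teeth spaced about 430 nm apart, and among the nearby orders only m = 365 satisfies the matching condition. That mirrors the behavior described above: one color is strongly affected while its neighbors circulate largely undisturbed.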

“People have been saying for a long time that microrings and photonic crystals have complementary strengths, and so it would be great to put them together to get the best of both worlds,” Srinivasan says. “But in general, when people put them together this didn’t happen – sometimes you could even get the worst of both worlds. The notion that you can stick a photonic crystal into a microring with this kind of strength and modulation, while retaining a high quality factor (low loss), has actually been rather surprising for a lot of people, myself included.”

In their combined design, Srinivasan’s team showed that they could confine light to a space more than ten times smaller than in previous WGM devices, enabling a higher optical intensity than conventional WGMs reach. And they preserved some of the best qualities of WGMs, including a high quality factor (the light goes around the ring several thousand times before leaking out) and the ease of getting light into and out of the ring. Perhaps most importantly, the design and manufacture of these hybrid devices remain straightforward for different colors of light and other parameters.
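The link between the quality factor and the number of trips around the ring follows from two timescales: stored light decays with lifetime τ = Q / ω, and one lap takes T = n_g · L / c, where n_g is the group index and L the circumference. Their ratio, N = τ / T = Q · λ / (2π · n_g · L), is the average number of round trips before the stored energy drops to 1/e of its initial value. The sketch below evaluates it; the ring size, group index, and sample Q values are assumptions chosen for illustration, not measured values from the papers.

```python
import math

SPEED_OF_LIGHT_UM_PER_S = 2.998e14  # speed of light in micrometres per second

def round_trips(q_factor, wavelength_um, n_group, circumference_um):
    """Average number of laps before the stored energy falls to 1/e.

    tau = Q / omega is the photon lifetime; t_round = n_g * L / c is the time for one lap.
    """
    omega = 2 * math.pi * SPEED_OF_LIGHT_UM_PER_S / wavelength_um  # angular frequency (rad/s)
    tau = q_factor / omega
    t_round = n_group * circumference_um / SPEED_OF_LIGHT_UM_PER_S
    return tau / t_round

# Hypothetical ring: 100 um diameter, group index 2.0, light at 1550 nm
circumference = math.pi * 100.0
for q in (1e5, 1e6, 1e7):
    print(f"Q = {q:.0e}: about {round_trips(q, 1.55, 2.0, circumference):.0f} round trips")
```

Because N grows in direct proportion to Q, a tenfold higher quality factor means ten times as many laps. For a smaller ring, the same Q corresponds to more laps, since each lap is shorter.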

“In our work it’s basically the purest, simplest photonic crystal,” says Xiyuan Lu, an assistant research scientist at NIST and JQI and an author on both publications. “Which is why you don't need to carry out any simulation. You can know [how to design properties] intuitively.”

After adding the microgear notches to the device last year, the team extended its capabilities and detailed its performance in their more recent work. They put multiple defects into the notch pattern, each created by making a few of the gear teeth shorter than the surrounding ones. Each defect confines light to a small fraction of the microring's circumference, much like in a photonic crystal. They were able to put up to four defects into the same microring, confining light in four places and building up high intensities in tightly confined spaces.
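The multi-defect pattern can be pictured as a list of tooth heights. The sketch below builds such a profile, with defects spaced evenly around the ring and each one shortening the teeth near its center along a quadratic dip. The dip shape, the even spacing, the relative units, and all parameter values are hypothetical choices for illustration; the article does not specify how the team tapered their defects.

```python
def tooth_heights(n_teeth, nominal, n_defects, half_width, min_fraction):
    """Return one height per gear tooth (relative units).

    Each defect shortens the teeth within half_width of its centre, dipping
    quadratically to min_fraction * nominal at the centre. Defects are placed
    evenly around the ring (an assumption made for this illustration).
    """
    spacing = n_teeth / n_defects
    if half_width < 1 or 2 * half_width >= spacing:
        raise ValueError("half_width must be >= 1 and defects must not overlap")
    heights = [nominal] * n_teeth
    for d in range(n_defects):
        centre = round(d * spacing)
        for offset in range(-half_width, half_width + 1):
            i = (centre + offset) % n_teeth         # wrap around the closed ring
            depth = 1 - (offset / half_width) ** 2  # 1 at the centre, 0 at the edges
            heights[i] = nominal * (1 - (1 - min_fraction) * depth)
    return heights

# Hypothetical gear: 730 teeth, four defects, each spanning a few teeth
profile = tooth_heights(n_teeth=730, nominal=1.0, n_defects=4, half_width=5, min_fraction=0.6)
shortened = [i for i, h in enumerate(profile) if h < 1.0]
print(len(shortened), "teeth shortened; shortest tooth:", min(profile))
```

A smooth taper like this is one common way to sketch a defect; a real design could instead shorten a few teeth abruptly, and the same function shape could be swapped out without changing the rest of the bookkeeping.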

They also found another unique feature of the microgear approach: it can control different colors of light in different ways at the same time. Certain colors get trapped in the defects and confined to a volume much smaller than the ring itself. Meanwhile, other colors circulate freely around the microring, unconfined by the defects but still influenced by the gear structure, giving researchers extra control over the light beam.

In a normal WGM, the electromagnetic field that makes up a beam or a pulse of light wraps around the microring, forming a standing wave. If you were to ride along this wave, it would take you up and down along the edge of the ring, through a set number of peaks and troughs, before dropping you back where you started. Although the number of peaks and troughs can be predicted, the ring itself does not determine exactly where they line up: in a perfectly symmetric ring, every position is equally allowed, so their placement is effectively random.

“If everything is symmetric, light can stand anywhere it likes,” says Lu. “But now we can control it.”

By choosing where to place the microgears and defects, the researchers can control exactly where in the microring the peaks and troughs of a freely circulating color end up. They can even make the light wrap around in unintuitive ways, creating something akin to a Möbius strip out of light: a circular structure you’d have to traverse twice to end up where you started.
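The pinning effect can be illustrated with first-order perturbation theory, a simplified model supplied here rather than the team's own analysis. A standing wave cos(mθ + φ) around the ring has its frequency shifted in proportion to the overlap between its intensity and the ring's modulation. For a smooth ring, or a gear whose period does not match the mode, that overlap does not depend on φ, so the wave can sit anywhere. With a gear of 2m teeth, modeled here as cos(2mθ), the overlap equals cos(2φ)/4 and is largest at φ = 0 and smallest at φ = π/2: one standing wave puts its intensity peaks at the maxima of the modulation and the other at the minima. The mode number m = 365, the cosine modulation shape, and the function name are assumptions.

```python
import math

def intensity_overlap(m, phase, modulation_periods, samples=4096):
    """(1/2pi) * integral over the ring of cos^2(m*theta + phase) * cos(modulation_periods*theta).

    To first order, a mode's frequency shift is proportional to this overlap.
    A uniform Riemann sum is exact here because the integrand is a trigonometric
    polynomial whose highest frequency (2*m + modulation_periods) is below `samples`.
    """
    total = 0.0
    for k in range(samples):
        theta = 2 * math.pi * k / samples
        total += math.cos(m * theta + phase) ** 2 * math.cos(modulation_periods * theta)
    return total / samples

m = 365  # hypothetical mode number
for phase in (0.0, math.pi / 4, math.pi / 2):
    matched = intensity_overlap(m, phase, 2 * m)         # gear with 2m teeth
    mismatched = intensity_overlap(m, phase, 2 * m + 2)  # gear with the wrong period
    print(f"phase {phase:.3f}: matched {matched:+.3f}, mismatched {mismatched:+.3f}")
```

The matched overlap swings from +0.25 at φ = 0 through zero to -0.25 at φ = π/2, so the gear singles out two fixed positions for the standing wave, while the mismatched gear gives zero at every phase and leaves the position unconstrained at this order.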

In addition to fun with electromagnetism, these microgears open up possible applications in several realms, including nonlinear optics, where light interacts with the material it travels through to produce new colors and directions.

“In photonic crystals, you can kind of engineer one mode pretty well,” Srinivasan says. “But it’s difficult to engineer multiple modes simultaneously. With this device, we can envision mixing between different colors of light that we can really engineer the modes of while having these additional resources of strong confinement and high intensity.”

Another promising application is in cavity quantum electrodynamics: the fundamental study of the interactions between atoms and light. The approach is to place single atoms or quantum dots near a localized, intense beam of light and study their behavior. This also allows quantum matter to be controlled with light.
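One standard way to quantify why tight confinement helps in cavity quantum electrodynamics is the Purcell factor, F = (3 / 4π²) · (λ/n)³ · Q / V, which estimates how much a cavity speeds up light emission from an emitter placed at the field maximum. It is a textbook figure of merit brought in here for illustration; the article does not quote it. The sketch assumes an ideally positioned and oriented emitter with a linewidth narrower than the cavity's, expresses V in units of (λ/n)³, and uses hypothetical Q and V values to show that, at the same Q, a mode volume ten times smaller gives a tenfold larger factor.

```python
import math

def purcell_factor(q_factor, volume_in_cubic_wavelengths):
    """Purcell factor F = 3/(4 pi^2) * (lambda/n)^3 * Q / V.

    V is expressed in units of (lambda/n)^3, so the wavelength factor cancels.
    Assumes an ideally placed, ideally oriented emitter narrower than the cavity linewidth.
    """
    return 3.0 / (4.0 * math.pi ** 2) * q_factor / volume_in_cubic_wavelengths

# Hypothetical comparison at fixed Q: a larger mode vs one ten times smaller
q = 1e5
large_mode = purcell_factor(q, 100.0)
small_mode = purcell_factor(q, 10.0)
print(f"F (V = 100): {large_mode:.1f}, F (V = 10): {small_mode:.1f}")
```

Because F scales as Q / V, shrinking the mode volume while keeping the quality factor high, the combination the microgear ring offers, raises the enhancement directly.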

“We have a platform now where it’s straightforward for us to have multiple sites within one of these resonators that can host single quantum emitters,” Srinivasan says.

These potential applications have not been demonstrated yet, but the researchers are confident that this new tool will find many uses. Among its strongest advantages is how easy it is to design, fabricate and work with.

“In our case, the platform seems to be quite forgiving,” Lu says. “If you do anything new, chances are it can work well.”

Original story by Dina Genkina: https://jqi.umd.edu/news/two-light-trapping-techniques-combine-best-both-worlds

In addition to Lu and Srinivasan, authors on the papers included Mingkang Wang, a postdoctoral associate at NIST; Feng Zhou, a research associate at NIST; Andrew McClung, a former postdoctoral researcher at the University of Massachusetts Amherst now at Raytheon; Marcelo Davanco, a research scientist at NIST; and Vladimir Aksyuk, the project leader in the Photonics and Optomechanics Group at NIST.

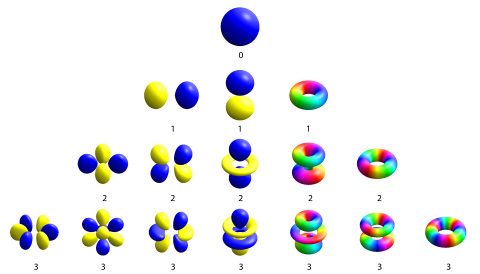

Atomic orbitals at different angular momentum values (labeled with numbers) form a variety of shapes. (Credit: adapted from Geek3, CC BY-SA 4.0, via Wikimedia Commons)
