Quantum Gases Keep Their Cool, Prompting New Mysteries

- Published: Tuesday, September 27 2022 02:46

Quantum physics is a notorious rule-breaker. For example, it makes the classical laws of thermodynamics, which describe how heat and energy move around, look more like guidelines than ironclad natural laws.

In some experiments, a quantum object can keep its cool despite sitting next to something hot that is steadily releasing energy. It’s similar to reaching into the oven for a hot pan without a mitt and having your hand remain comfortably cool.

For an isolated quantum object, like a single atom, physicists have a good idea why this behavior sometimes happens. But many researchers suspected that any time several quantum objects got together and started bumping into each other, the resulting gang of quantum particles would be too disorganized to pull off this particular violation of the laws of thermodynamics.

A new experiment led by David Weld, an associate professor of physics at the University of California, Santa Barbara (UCSB), in collaboration with Professor Victor Galitski of the Joint Quantum Institute, shows that several interacting quantum particles can also keep their cool, at least for a time. In a paper published Sept. 26, 2022, in the journal Nature Physics, Galitski, who is also a Chesapeake Chair Professor of Theoretical Physics in the Department of Physics at UMD, and the researchers at UCSB describe the experiment, which is the first to explore this behavior, called dynamical localization, with interactions included.

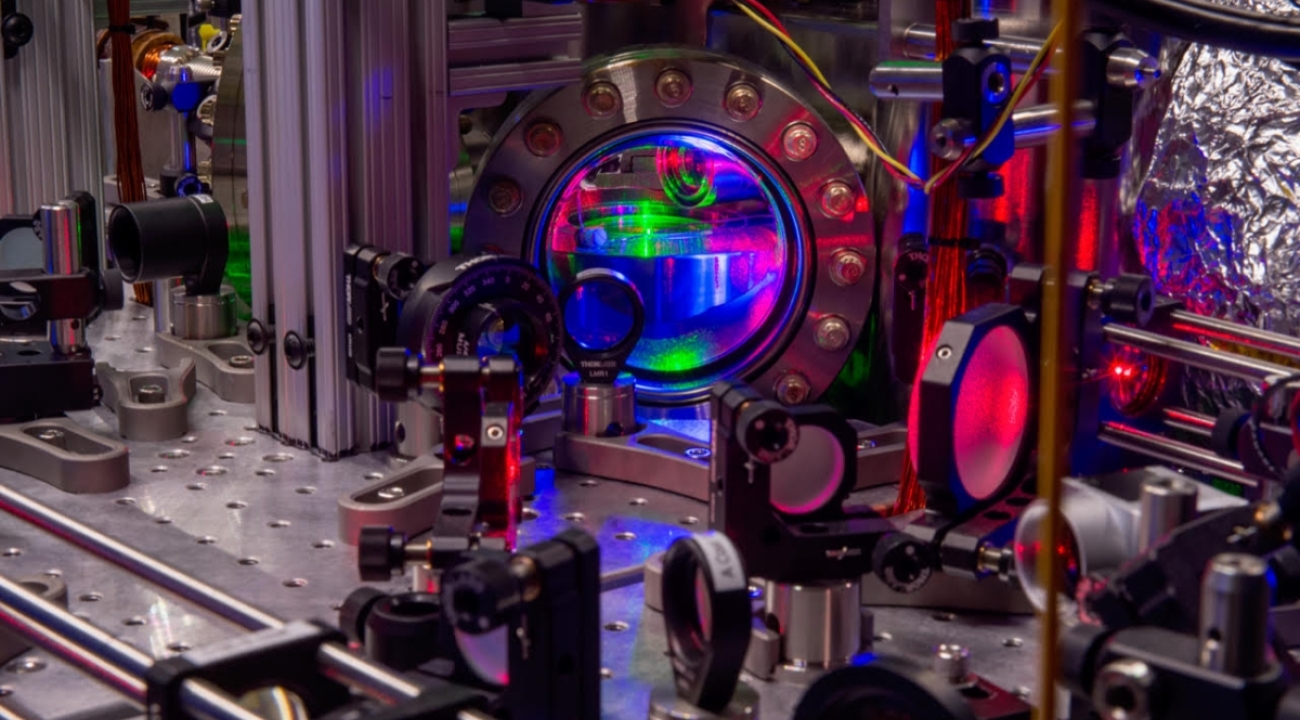

The experiment builds on theoretical predictions made by Galitski and his colleagues, and the results reveal mysteries for the researchers to pursue concerning what the particles are doing in the experiment. Uncovering exactly how the particles can break a revered law of thermodynamics might provide significant insight into how quantum effects and interactions combine, and those insights might find uses in the designs of quantum computers, which will necessarily contain many interacting particles.

Equipment at the University of California, Santa Barbara used to create clouds of Lithium atoms. It was used to study how atoms absorb energy when they have various levels of interaction with each other. (Credit: Tony Mastres, UCSB)

“The big question is whether this phenomenon can survive in systems which are actually of interest,” Galitski says. “This is the first exploration of the fate of this very interesting phenomenon of non-heating as a function of interactions.”

For a single particle, physicists have the math to explain how quantum mechanical waves of probability sway and crash together in just the right way that crests and troughs meet and cancel out any possibility of the particle absorbing energy. Galitski and his colleagues decided to tackle the more complicated case of investigating whether the same behavior can occur when multiple particles interact. They predicted that in the right circumstances repeated kicks of energy would warm up the collection of particles but that at a certain point the temperature would plateau and refuse to go up anymore.

The next natural step was to confirm that this behavior can happen in a lab and that their math wasn’t missing some crucial detail of reality. Fortunately, the idea intrigued Weld, who had the right experimental equipment for testing the theory—almost. His lab can set up quantum particles with the needed interactions and supply of energy to attempt to defy thermodynamics; they used lasers to trap a quantum gas of lithium atoms and then steadily pumped energy at the atoms with laser pulses.

But there was a catch: To keep the math manageable, Galitski’s theory was calculated for particles confined to live on a one-dimensional line, and it’s not easy for Weld and his team to keep the cloud of atoms that tightly constrained. Atoms in a gas naturally explore and interact in three dimensions even when confined in a slender trap. The team made their cloud of atoms long and narrow, but the extra wiggle room tends to significantly impact the quantum world of atoms.

“With just a few discussions, the basic picture of what we wanted to do was clear quite quickly,” Weld says. “Though the experiment turned out to be quite challenging and it took a lot of effort in the lab to make it all work!”

While Weld’s lab couldn’t do the experiment in one dimension, they could easily control how strong the interactions were between atoms. So, the team started with the well-understood case where particles weren’t interacting and then observed how things changed as they increased the interaction strength.

“So, they didn't actually do exactly what we wanted them to do, in a one-dimensional system, because they just don't have one-dimensional systems,” Galitski says, “but they did what they could do. There is this kind of a tension, which is common, that what’s easy theoretically is usually difficult experimentally, and vice versa.”

When the particles weren’t interacting, the researchers saw the expected result: the particles heated up a little before reaching a constant temperature. Then, when they adjusted the experiment so that the atoms could interact a little, they still saw the temperature plateau at the same level. But unlike in the one-dimensional theory, the atoms eventually started heating up again, although not as quickly as predicted by normal thermodynamics. When they increased the level of interactions, the temperature plateaued for a shorter time.

While Galitski’s one-dimensional theory doesn’t describe the exact experiment performed, another theory seems to have some luck explaining the sluggish heating that follows the plateaus. That theory applies to very cold groups of particles that have formed a Bose-Einstein condensate, a phase of matter where all the particles share the same quantum state. The equations that describe Bose-Einstein condensates can predict the rate of the slow heating, even though that very heating means the atoms shouldn’t be describable as a Bose-Einstein condensate.

“So, in some sense, it's a double mystery,” Galitski says. “We actually don't know why it goes this way, but there is a theory which is not supposed to work but kind of works.”

The observed plateaus prove that interactions don’t always force particles to bow to the decrees of thermodynamics. Efforts to push experiments to test the predictions for particles constrained to one dimension and to push the theory to explain the three-dimensional experiments might not only reveal new quantum physics but could also lead to the development of new research tools. If the physics behind these experiments can be untangled, perhaps the plateaus will one day be extended and can be used to design new and better quantum technologies.

“Mysteries are always good because they lead oftentimes to new discoveries,” Galitski says. “What would be nice is to see whether you can stabilize the dynamic localization—this plateau—under some protocols and conditions. That's what they're working on. And it's important because it would preserve quantum information.”

In addition to Galitski and Weld, former UCSB physics student Alec Cao; UCSB graduate students Roshan Sajjad, Ethan Q. Simmons, Jeremy L. Tanlimco and Eber Nolasco-Martinez; former UCSB postdoctoral researcher Hector Mas; and UCSB postdoctoral researchers Toshihiko Shimasaki and H. Esat Kondakci were also co-authors of the paper.

Original story by Bailey Bedford: https://jqi.umd.edu/news/quantum-gases-keep-their-cool-prompting-new-mysteries